TAGS

Korean

Natural language

NLP

Considers unique characteristics of the Korean language

Natural language processing (NLP) is an active area of research worldwide. However, Korean NLP research has been limited in its use of open datasets because most of them are in English, which makes it difficult to produce precise results that account for the unique characteristics of the Korean language. To solve this problem, the startup Upstage partnered with 10 other institutions, including the Korea Advanced Institute of Science and Technology (KAIST), New York University (NYU), Naver, and Google, to build the KLUE benchmark, which stands for Korean Language Understanding Evaluation. KLUE aims to provide an evaluation system that enables fair comparison across Korean language models. Datumo took part in building KLUE by providing and processing data.

KLUE is a collection of 8 Korean natural language understanding (NLU) tasks: Topic Classification, Semantic Textual Similarity, Natural Language Inference, Named Entity Recognition, Relation Extraction, Dependency Parsing, Machine Reading Comprehension, and Dialogue State Tracking. The dataset consists of original Korean used in daily life, rather than Korean translated from English, which allows higher precision in understanding and evaluating the language. By providing a standard for accurately evaluating Korean language models, KLUE is expected to accelerate Korean NLP research.

The KLUE project is also significant as the first licensed, open dataset in Korea.

About

Korean Language Understanding Evaluation

Datumo provides high quality data for smarter AI. As part of Datumo's Data Sponsorship Program, Datumo cooperated with Upstage in building the following dataset.

Upstage was founded in October 2020 by Sunghun Kim, who goes by Sung Kim, to accelerate the AI transformation of enterprises. Upstage identifies issues in enterprises that can be improved with AI technology and provides consultation on building basic AI models and systems according to their needs.

Sung Kim, the founder of Upstage, received the Most Influential Paper award at ICSME 2018. He is also a four-time winner of the ACM SIGSOFT Distinguished Paper Award (ICSE 2007, ASE 2012, ICSE 2013, and ISSTA 2014). Sung Kim is an Associate Professor of Computer Science at the Hong Kong University of Science and Technology and has led the Naver Clova AI team in Korea.

Upstage plans to support enterprises' AI transformation by helping them develop their own teams of professionals with deeper knowledge of AI. Upstage actively seeks to provide robust education based on its AI business experience.

Testimonial

“While building the KLUE dataset with Datumo, we were most impressed by their data quality assurance system. Despite the intricacy of the data and the tight deadline, Datumo was able to provide specific guidelines for the workers to guarantee data consistency. They also made sure to train and select qualified workers, and inspect the entire dataset. We believe that KLUE, the representative Korean NLP benchmark dataset, was able to come into the world, owing to Datumo’s capability and passion.”

SungJoon Park, Upstage AI research engineer & Chief project manager of Project KLUE

Dataset specification

TC

- 210,000 Classification annotations (70,000 headlines * 3 topics)

STS

- 105,000 similarity score labels (15,000 pairs of sentences * 7 similarity scores)

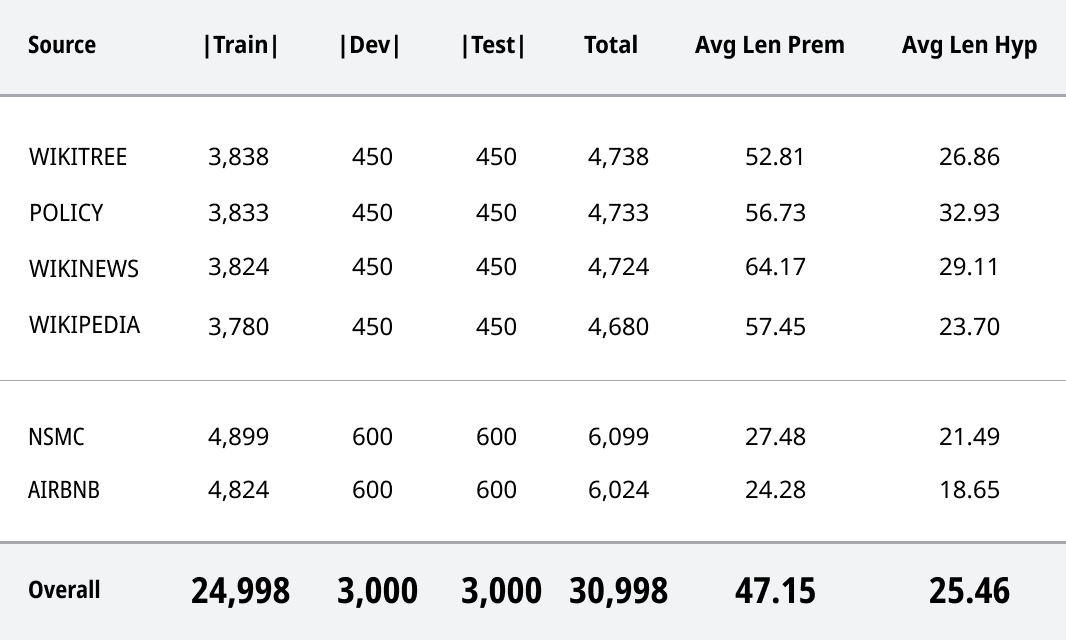

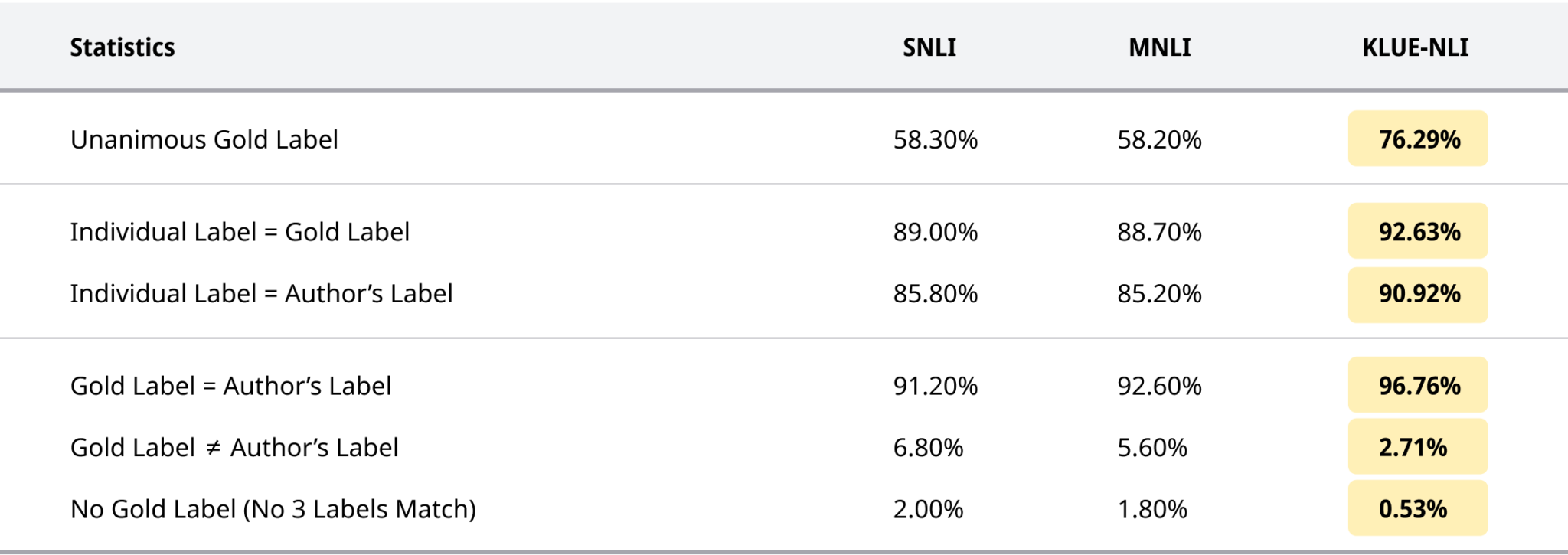

NLI

- 30,998 sentence pairs

Statistics for KLUE-NLI. The first three columns give the number of sentence pairs in the train, development, and test sets. Mean Len Prem and Mean Len Hyp are the average number of characters in the premise and hypothesis sentences, respectively. Source: KLUE Paper

MRC

- 29,313 questions (Type1: 12,207/ Type2: 7,895/ Type3: 9,211)

Process of annotation

Amongst the eight Korean natural language understanding (NLU) tasks, Datumo took responsibility for four: Topic Classification, Semantic Textual Similarity, Natural Language Inference, and Machine Reading Comprehension.

For Topic Classification and Semantic Textual Similarity in particular, qualified in-house workers participated to maximize accuracy.

As the host of the "AI Dataset Sponsorship Program", Datumo has also participated in the KLUE project as a sponsor.

Data Collection

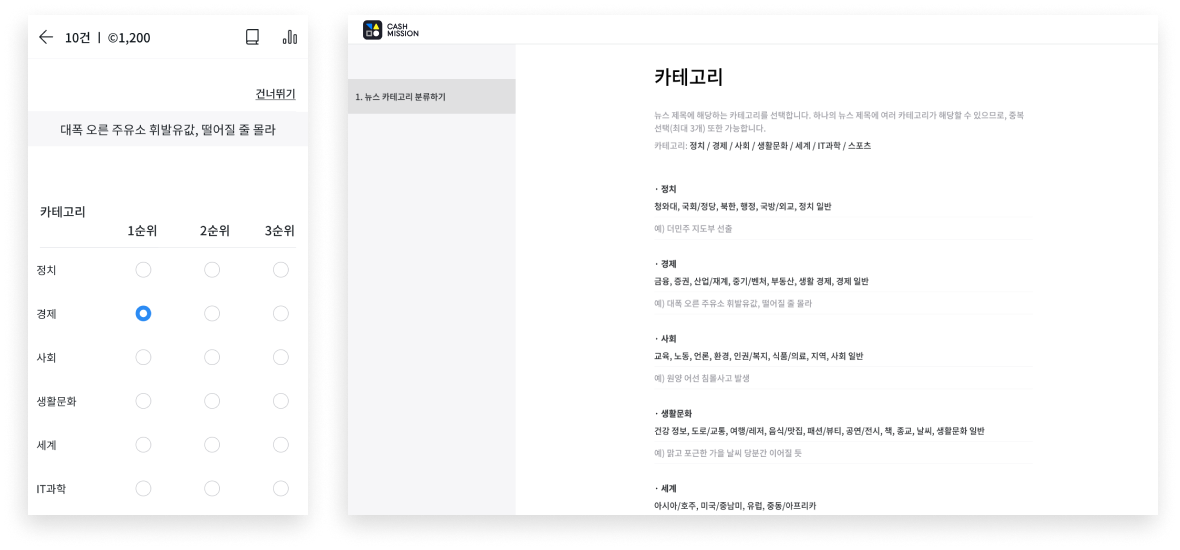

Topic Classification

Topic Classification aims to train a classifier to predict the topic of a given text snippet.

1) News headlines from online articles are collected.

2) For each headline, three annotators independently label three topics in order of relevance among seven categories.

→ Workers are requested to report any headline that includes personally identifiable information (PII), expresses social bias, or is hate speech.

The reported headline is discarded after manual review.

A portion of the data was collected and labeled using Cash Mission, Datumo's crowd-sourcing platform.

Sample Data

{
  "guid": "ynat-v1_dev_00000",
  "title": "5억원 무이자 융자는 되고 7천만원 이사비는 안된다",
  "predefined_news_category": "경제",
  "label": "사회",
  "annotations": {
    "annotators": ["18", "03", "15"],
    "annotations": {
      "first-scope": ["사회", "사회", "경제"],
      "second-scope": ["해당없음", "해당없음", "사회"],
      "third-scope": ["해당없음", "해당없음", "생활문화"]
    }
  }
}
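In the sample record, the final `label` matches the topic that most annotators ranked first. A minimal sketch of that aggregation follows, assuming a strict majority vote over the `first-scope` entries; the function name `majority_topic` and the tie handling are illustrative assumptions, not the documented KLUE procedure.

```python
from collections import Counter


def majority_topic(first_scope):
    """Return the topic ranked first by a strict majority of annotators.

    Returns None when no topic has more than half of the votes.
    This rule is an assumption made for illustration.
    """
    topic, votes = Counter(first_scope).most_common(1)[0]
    return topic if votes > len(first_scope) / 2 else None


# First-choice topics of the three annotators in the sample record.
first_scope = ["사회", "사회", "경제"]
print(majority_topic(first_scope))  # 사회
```

Returning `None` on a tie leaves room for a manual review step rather than picking a topic arbitrarily.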

Data Collection

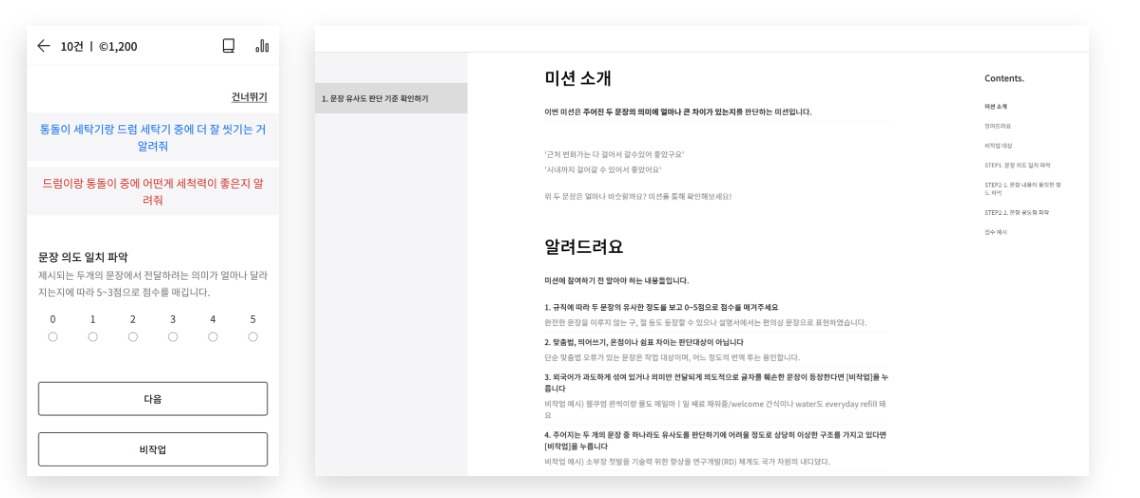

Semantic Textual Similarity

Semantic textual similarity (STS) measures the degree of semantic equivalence between two sentences. STS is essential to other NLP tasks such as machine translation, summarization, and question answering.

1) Compare the semantics of a pair of sentences - Evaluate the similarity based on the purpose or the meaning of the sentences, not on the use of the same vocabulary.

2) Score the similarity by comparing "important" and "unimportant" contents - "Important" contents cover the purpose or the meaning, while "unimportant" contents cover nuance or expressions of politeness.

A portion of the data was collected and labeled using Cash Mission, Datumo's crowd-sourcing platform.

Sample Data

{
  "guid": "klue-sts-v1_dev_00001",
  "source": "airbnb-sampled",
  "sentence1": "주요 관광지 모두 걸어서 이동가능합니다.",
  "sentence2": "위치는 피렌체 중심가까지 걸어서 이동 가능합니다.",
  "labels": {
    "label": 1.4,
    "real-label": 1.428571428571429,
    "binary-label": 0
  },
  "annotations": {
    "agreement": "0:4:3:0:0:0",
    "annotators": ["16", "17", "07", "03", "06", "19", "14"],
    "annotations": [2, 1, 2, 2, 1, 1, 1]
  }
}
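The label fields in the sample record are consistent with simple statistics over the seven annotator scores. The sketch below reproduces them under stated assumptions: `real-label` is the mean score, `label` is that mean rounded to one decimal, `agreement` counts how many annotators gave each score from 0 to 5, and `binary-label` applies a threshold of 3.0. The threshold value and the function name `summarize_sts` are assumptions made for illustration.

```python
from collections import Counter


def summarize_sts(scores, threshold=3.0):
    """Aggregate per-annotator similarity scores (integers from 0 to 5)."""
    real = sum(scores) / len(scores)
    counts = Counter(scores)
    return {
        "label": round(real, 1),
        "real-label": real,
        # Assumed rule: pairs scoring at or above the threshold count as similar.
        "binary-label": int(real >= threshold),
        # Number of annotators who chose each score, from 0 through 5.
        "agreement": ":".join(str(counts.get(s, 0)) for s in range(6)),
    }


summary = summarize_sts([2, 1, 2, 2, 1, 1, 1])
print(summary["label"], summary["binary-label"], summary["agreement"])
# 1.4 0 0:4:3:0:0:0
```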

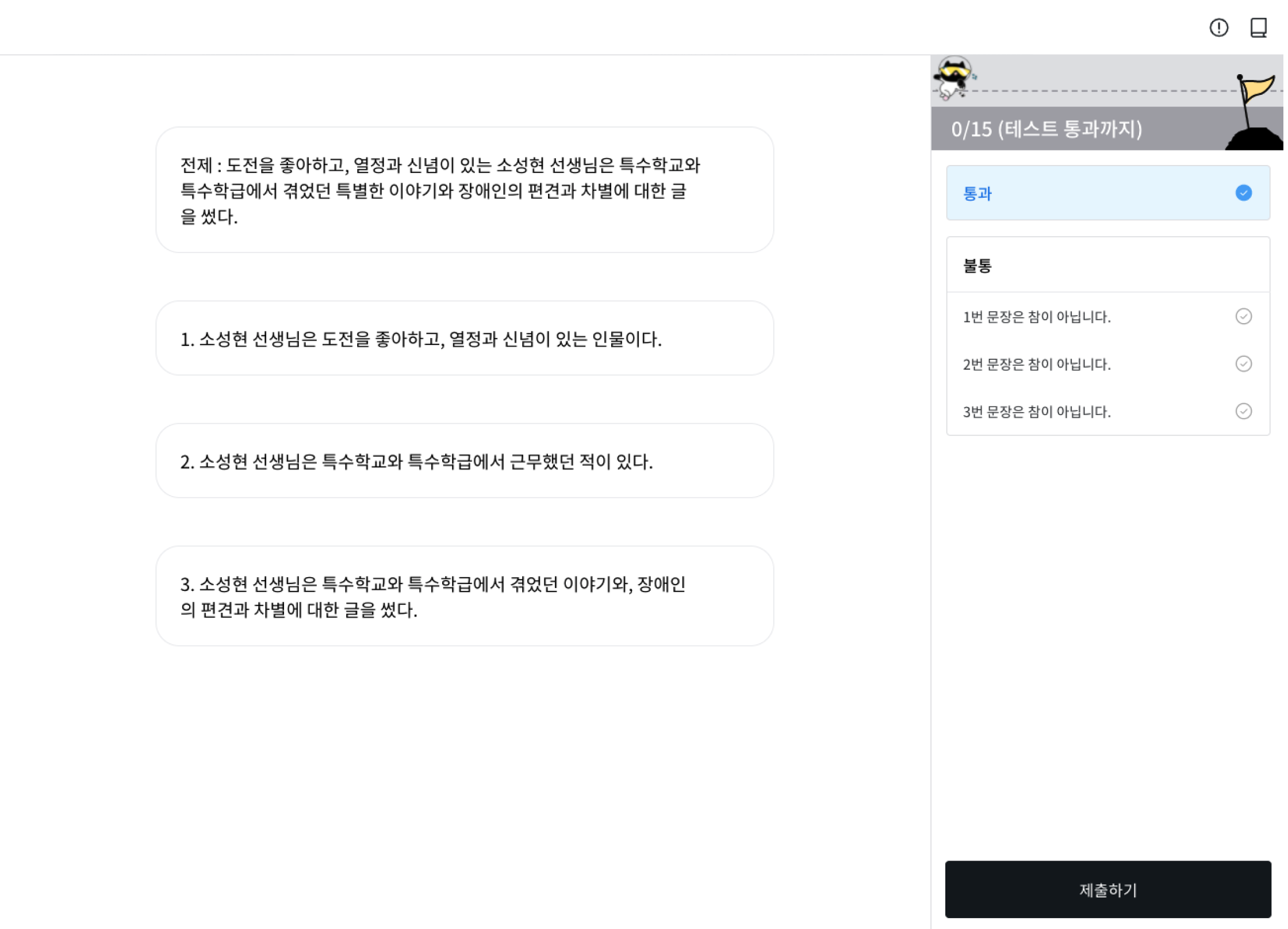

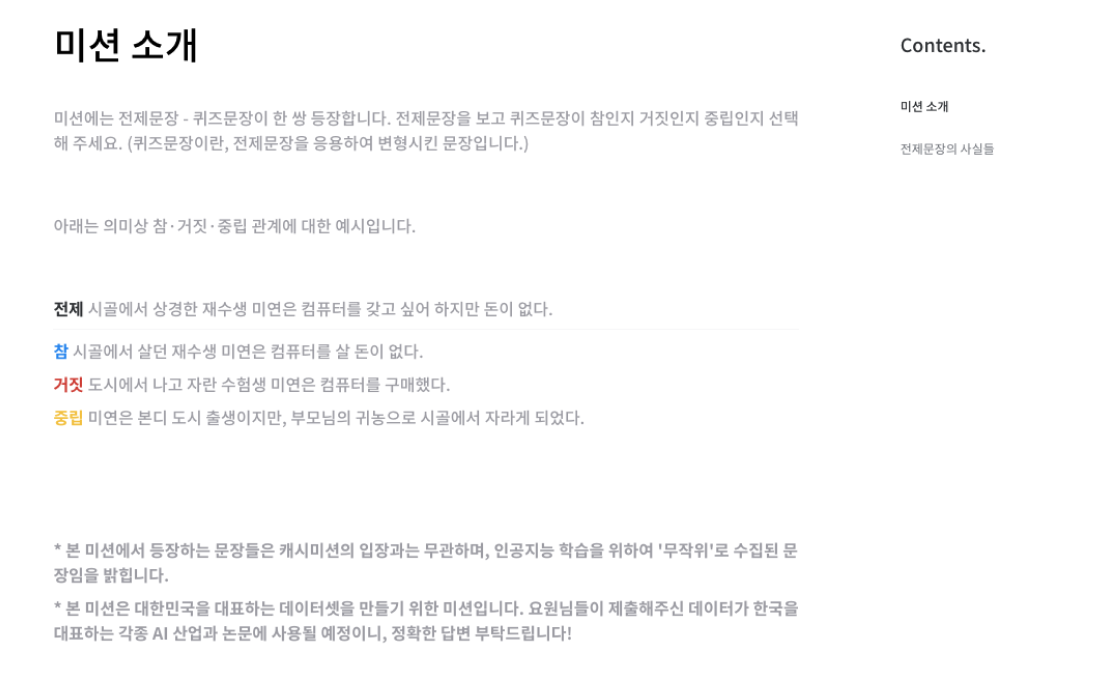

Data Collection

Natural Language Inference

The goal of natural language inference (NLI) is to train a model to infer the relationship between the hypothesis sentence and the premise sentence.

1) Based on the premise, an annotator writes three hypothesis sentences, one for each of the three relationship classes (true/false/undetermined).

2) Four additional annotators label the relationship between the premise and the hypothesis, for validation.
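The validation step can be sketched as a vote over five labels: the author's intended label plus the four validators' labels. The field names below (`author`, `label2` to `label5`) follow the sample record in this section; the rule of requiring at least three matching votes and the function name `gold_label` are assumptions made for illustration.

```python
from collections import Counter

# The author's intended label plus the four validation labels.
LABEL_FIELDS = ("author", "label2", "label3", "label4", "label5")


def gold_label(record, min_votes=3):
    """Return the label chosen by at least `min_votes` of the five annotators.

    Returns None when no label reaches that count (assumed rule).
    """
    votes = Counter(record[field] for field in LABEL_FIELDS)
    label, count = votes.most_common(1)[0]
    return label if count >= min_votes else None


record = {
    "author": "contradiction",
    "label2": "contradiction",
    "label3": "contradiction",
    "label4": "contradiction",
    "label5": "contradiction",
}
print(gold_label(record))  # contradiction
```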

KLUE-NLI, built on Datumo's data, achieves higher accuracy than SNLI (Stanford Natural Language Inference) and MNLI (Multi-Genre Natural Language Inference).

A portion of the data was collected and labeled using Cash Mission, Datumo's crowd-sourcing platform.

Sample Data

{
  "guid": "klue-nli-v1_dev_00000",
  "source": "airbnb",
  "premise": "흡연자분들은 발코니가 있는 방이면 발코니에서 흡연이 가능합니다.",
  "hypothesis": "어떤 방에서도 흡연은 금지됩니다.",
  "gold_label": "contradiction",
  "author": "contradiction",
  "label2": "contradiction",
  "label3": "contradiction",
  "label4": "contradiction",
  "label5": "contradiction"
}

Data Collection

Machine Reading Comprehension

Machine reading comprehension (MRC) is a task designed to evaluate a model's ability to read a given text passage and then answer a question about it, that is, its reading comprehension.

1) Selected workers generate questions and label the corresponding answer spans according to the question type.

2) Three inspectors are assigned for each question type to validate the generated questions and answers.

Question Types

- Question Paraphrasing (Type 1):

Type 1 examples focus on paraphrasing the passage sentences when generating questions to reduce word overlap between them. The paraphrasing enables us to validate whether the model can correctly understand the semantics of the paraphrased question and infer the answer.

- Multiple-Sentence Reasoning (Type 2):

Type 2 examples focus on questions that require multiple-sentence reasoning: models must derive the answer by reasoning over at least two sentences in the passage.

- Unanswerable Questions (Type 3):

Type 3 examples are questions that cannot be answered from the given passage. We call these ‘unanswerable’ questions.

Sample Data

Questions and answers are formulated based on the given text.

한겨울에 봄꽃 ... 지구촌 곳곳 이상고온_한경_국제

유럽과 미국을 비롯해 세계 각국에 이상고온 현상이 나타나고 있다. 겨울철 함박눈이 내려야 할 곳에 봄꽃까지 피고 있다. 따뜻한 날씨에 나들이객이 늘면서 음식점과 골프장 등은 반색하고 있지만 겨울철 옷이 팔리지 않아 의류업체들은 울상이다. 글로벌 에너지 회사들은 가뜩이나 저유가로 힘겨운 상황에서 난방 수요 감소라는 '악재'까지 만났다. 러시아 기상청에 따르면 혹독한 추위로 악명 높은 모스크바의 낮기온이 22일(현지시간) 7도까지 치솟았다. 1936년 이후 79년 만에 최고 기온으로 예년(평균 영하 6.5도)보다 10도 이상 높다. 기상 전문가들은 이상고온 원인을 엘니뇨 현상으로 설명하고 있다. 엘니뇨는 적도 부근 해수면 온도가 상승하는 현상으로 폭우나 가뭄, 겨울철 이상고온 등의 원인이 된다. 이상고온에 에너지 회사들은 전전긍긍하고 있다. 미국 대형 투자은행인 골드만삭스는 22일 발표한 보고서에서 “올겨울 이상고온으로 난방용 천연가스와 등유 소비가 감소하고 있다”며 “원유 공급 과잉과 세계 경제성장 둔화 등에 따라 약세가 이어지는 에너지 가격을 더욱 끌어내릴 것”이라고 전망했다. 유가가 더 떨어질 수 있다는 전망이 제기되면서 미국 3위 에너지업체 코노코필립스는 러시아 원유개발사업에서 완전히 철수하겠다고 발표했다. 유럽 최대 에너지업체 로열더치셸은 지난 4월 인수를 발표한 영국 천연가스업체 BG그룹에 대해 50억달러 상당의 투자를 축소할 계획이다.

Sentence

- According to the Hydrometeorological Centre of Russia, Moscow, known for its harsh cold, warmed up to 7 degrees Celsius on the afternoon of the 22nd (local time).

- Goldman Sachs, an American investment bank and financial services company, reported on the 22nd that the consumption of natural gas and oil for heating is decreasing and that an oversupply of oil and slowing global economic growth will push energy prices even lower.

Question

- What was the highest temperature in Moscow on the day Goldman Sachs published their report?

Answer

- 7 degrees Celsius

Sample Data

{
  "annotatable": {
    "parts": ["s1s1h1", "s1s1v1"]
  },
  "anncomplete": true,
  "sources": [],
  "metas": {},
  "entities": [
    {
      "classId": "e_1",
      "part": "s1s1v1",
      "offsets": [
        {
          "start": 186,
          "text": "러시아 기상청에 따르면 혹독한 추위로 악명 높은 모스크바의 낮기온이 22일(현지시간) 7도까지 치솟았다."
        }
      ],
      "coordinates": [],
      "confidence": {
        "state": "pre-added",
        "who": ["user:ryu"],
        "prob": 1
      },
      "fields": {},
      "normalizations": {}
    },
    {
      "classId": "e_2",
      "part": "s1s1v1",
      "offsets": [
        {
          "start": 234,
          "text": "7도"
        }
      ],
      "coordinates": [],
      "confidence": {
        "state": "pre-added",
        "who": ["user:ryu"],
        "prob": 1
      },
      "fields": {},
      "normalizations": {}
    },
    {
      "classId": "e_1",
      "part": "s1s1v1",
      "offsets": [
        {
          "start": 751,
          "text": "미국 대형 투자은행인 골드만삭스는 22일 발표한 보고서에서 “올겨울 이상고온으로 난방용 천연가스와 등유 소비가 감소하고 있다”며 “원유 공급 과잉과 세계 경제성장 둔화 등에 따라 약세가 이어지는 에너지 가격을 더욱 끌어내릴 것”이라고 전망했다."
        }
      ],
      "coordinates": [],
      "confidence": {
        "state": "pre-added",
        "who": ["user:ryu"],
        "prob": 1
      },
      "fields": {},
      "normalizations": {}
    }
  ],
  "relations": []
}

Text annotation results from tagtog
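Each entity in the export pairs a `start` offset with the annotated `text`, so an inspector can check mechanically that every span really occurs at the stated position. The sketch below assumes that `start` is a character offset into the text of the annotated part; the sample is consistent with this, since "7도" sits 48 characters into the sentence that starts at 186, giving 234. The function name `find_offset_mismatches` and the padded stand-in for the full part text are hypothetical.

```python
def find_offset_mismatches(part_text, entities):
    """Return (classId, start, text) for every span not found at its offset."""
    mismatches = []
    for entity in entities:
        for offset in entity["offsets"]:
            start, text = offset["start"], offset["text"]
            if part_text[start:start + len(text)] != text:
                mismatches.append((entity["classId"], start, text))
    return mismatches


sentence = (
    "러시아 기상청에 따르면 혹독한 추위로 악명 높은 모스크바의 낮기온이 "
    "22일(현지시간) 7도까지 치솟았다."
)
# Stand-in for the full part text: 186 filler characters, then the sentence.
part_text = " " * 186 + sentence

entities = [
    {"classId": "e_1", "offsets": [{"start": 186, "text": sentence}]},
    {"classId": "e_2", "offsets": [{"start": 234, "text": "7도"}]},
]
print(find_offset_mismatches(part_text, entities))  # []
```

An empty list means every span matches; any returned tuple points at an annotation to re-inspect.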

Applications

For those who want to develop

- high-quality Korean NLP services

- fluent machine translation, summarization, and question answering services

CC BY-SA

Reusers are allowed to distribute, remix, adapt, and build upon the material in any medium or format, even commercially, so long as attribution is given to the creator. If you remix, adapt, or build upon the material, you must license the modified material under identical terms.

https://creativecommons.org/licenses/by-sa/3.0/deed.en